The web of data is the network resulting from publishing structured data sources in RDF and interlinking these data sources with explicit links. A large quantity of structured data is being published particularly through the Linking Open Data project (http://esw.w3.org/topic/SweoIG/TaskForces/CommunityProjects/LinkingOpenData). web data sets are expressed according to one or more vocabularies or ontologies, which range from simple database schema exposure to full-fledged ontologies.

The web of data requires to interlink the various published data sources. Given the large amount of published data, it is necessary to provide means to automatically link those data. Many tools were recently proposed in order to solve this problem, each having its own characteristics.

In many cases, data sets containing similar resources are published using different ontologies. Hence, data interlinking tools need to reconcile these ontologies before finding the links between entities. This could be done automatically, but more often this is done manually and built in the link specifications. This has two drawbacks:

For that purpose, after briefly introducing the challenges of data interlinking and ontology matching, we review six data interlinking tools and the way they are built. From this analysis, we provide a general framework for data interlinking in which all these tools can be included. This framework clearly separates the data interlinking and ontology matching activities and we show how these can collaborate through three different languages for links, data linking specifications and ontology alignments. We provide examples of an expressive alignment language and a modified linking specification language that can implement this cooperation.

We briefly introduce linked data and the data interlinking problem. We provide examples of this problem and why it would require specific linking tools. We then present why these tools could take advantage of ontology matching and alignments.

The web of data is based on the following four principles [bernerslee:2009]:

As long as they follow these rules, linked data can be published in various ways (RDF data sets, SPARQL endpoints, XHTML+RDFa pages [adida2008b], databases exposed through HTTP [bizer:2003,sahoo2009a]). Web data sets can also be constructed collaboratively, through the use of specialised tools [volkel:2006]. Two ways of publishing data can be distinguished. On one side, the publication of data sets: they can originate from relational databases or XML documents, exported in RDF and described according to a web ontology . Another way is to directly include structured data as annotations in HTML pages, using the W3C standard RDFa In this work, we primarily consider methods and tools working with web data sets.

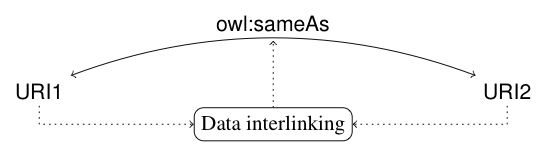

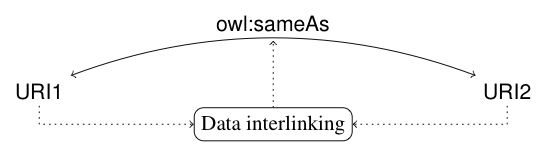

A main problem on the web of data is to create links between entities of different data sets. Most often, this consists of identifying the same entity across different data sets and publishing a link between them as a owl:sameAs statement (shortened as sameAs hereafter). We call this task data interlinking and summarise it in the following figure:

Once identified, the links discovered between two data sets must also be published in order to be reused. The VoiD vocabulary [alexander:2009] allows for describing linksets as special data sets containing sets of links between resources of two given data sets. A linkset is represented as an RDF named graph described using VoiD annotations, as shown in the RDF/N3 code below:

{

<http://www.example.org/linkset/DBPedia-MB>

a void:Linkset ;

void:target <http://www.dpbedia.org>;

void:target <http://www.musicbrainz.org>;

}

<http://www.example.org/linkset/DBPedia-MB>

{

<http://www.dbpedia.org/resource/Johann_Sebastian_Bach>

owl:sameAs

<http://www.musicbrainz.org/artist/24f1766e-9635-4d58-a4d4-9413f9f98a4c> .

}

Once linksets are constructed, two approaches are proposed to retrieve equivalences between resources: it is possible to assign to each real world entity a global identifier that will then be related to every URIs describing this entity. This is the approach taken in the OKKAM project [bouquet:2008] that proposes the usage of Entity Name Servers taking the role of resource name repositories. The other approach uses equivalence lists maintained with interlinked resources across data sets. There is thus no global identifier in this approach but equivalence links can be followed using a third-party web service, e.g., http://sameas.org, or a bilatteral protocol [volz2009a]. This approach is used by the RKB explorer [glaser:2008,jaffri:2008b].

The data interlinking task can be achieved manually or with the help of data interlinking tools. These tools take as input two data sets and ultimately provide a linkset. In addition, they use what we call a linking specification, i.e., a ``script'' specifying how and/or what to link. Indeed, given data set sizes, the search space for resources interlinking can reach several billion resources, e.g., DBpedia [bizer2009a]. It is thus necessary to use heuristics giving hints to the interlinking system about where to look for the corresponding resources in the two data sets. Some data sets being only available behind a SPARQL endpoint, it is interesting to note if the tools are able to work in this setting. An important step in using data interlinking tools is the linking specification step. These linking specifications can be specific to a pair of data sets and can be reused for regenerating linksets.

Mining for similar resources in two web data sets raises many problems. Each data sets having its own namespace, resources in different data sets are given different URIs. Also, although naming conventions exist [sauermann:2008], there is no formal nor standard way of naming resources. For example, if we take the URI for the famous musician Johann Sebastian Bach in various web data sets we obtain different results though they all represent the same real world object (see the following table).

| Data Set | URI |

| MusicBrainz | http://musicbrainz.org/artist/24f1766e-9635-4d58-a4d4-9413f9f98a4c |

| LastFM | http://www.last.fm/music/Johann+Sebastian+Bach |

| DBpedia | http://dbpedia.org/resource/Johann_Sebastian_Bach |

| OpenCyc | http://sw.opencyc.org/concept/Mx4rwJw6npwpEbGdrcN5Y29ycA |

As this example demonstrates, URIs are different across data sets, both because of their namespaces and because of their fragments. Fragments are generated according to two strategies: an internal ID as for MusicBrainz and OpenCyc, or the concatenation of some of the resource properties, as for LastFM and DBpedia. When the first strategy is used, an interlinking system might not be able to find correspondences between two resources by looking at URIs only.

However, in the case of MusicBrainz and OpenCyc, it is not possible to use this strategy. Fortunately, dereferencing URIs can be used for retrieving more information about entities: property values and related resources can be observed. But for the same real-world entity, the same property can take different values, making the interlinking process more difficult. This can be because of varying value approximations across data sets, because of different units of measure, because of mistakes in the data sets, or because of loose ontological specifications. For instance, the property foaf:name does not specify in what format should the name be given. ``J.S. Bach'', ``Bach, J.S.'' or ``Johann Sebastian Bach'' are possible values for this property. Hence, data interlinking tools have to compare property values in order to decide if two entities are the same, and must be linked, or not. For that purpose, tools use similarity measures based on the type of values, e.g., string, numbers, dates, and aggregate the results of these measures. This activity is reminiscent of record linkage which has been given considerable attention in database [fellegi:1969,winkler:2006,elmagarmid:2007]. The tools studied reuse many of the record linkage techniques.

Another problem is caused by the usage of heterogeneous ontologies for describing data sets. In this case, a same resource is typed according to different classes and described with different predicates belonging to different ontologies. For example, a name in a data set can be attributed using the foaf:name data property from the FOAF ontology while it is attributed using the vcard:N object property from the VCard ontology in another data set.

Hence, for the interlinking techniques to work, it is necessary that the data sets use the same ontology or that data interlinking tools are aware of the correspondences between ontologies.

Ontology matching allows for finding correspondences between ontology entities [euzenat:2007b]. The result of this process is called an ontology alignment. Once the ontologies matched, the alignment can be stored and retrieved when an application needs to use data described according to another ontology [euzenat:2007c].

Matching ontologies requires to overcome the mismatches originating from the different conceptualizations of the domains described by ontologies [visser:1997,klein:2001]. These mismatches may be of different nature: terminological mismatches concern differences of naming such as the usage of synonym terms for concept labeling; conceptual mismatches concern different conceptualizations of the domain such as structuring along different properties; structural mismatches concern heterogeneous structures, like different granularities in the class hierarchies. Ontology matching is similar to database schema matching [rahm:2001]. Specific works on ontology matching were proposed in the last ten years [noy:2000] that now reach maturity [euzenat:2007b]. It is not the purpose of this paper to describe the particular techniques.

While different URI constructions and variations of property values can find automatic solutions, the problem of having heterogeneous ontologies is in most interlinking tools solved by manually specifying the correspondences. This considerably complexifies the interlinking process. Ontology matching techniques can be used to facilitate the interlinking task and ontology alignments reused in linking specifications.

The goal of this work is to investigate the relationships between data interlinking and ontology matching. In particular, we want to understand if these two activities should be merged into a single activity and share the same formats or if there are good reasons to keep them separated. In the second perspective, we also want to establish how they can benefit from one another. For that purpose, we analyzed available systems for data interlinking.

François Scharffe and Jérôme Euzenat